Scaling Tuple's Service to Millions

Mikey Pulaski · @YoungDynastyNet · November 7, 2022

Since Tuple launched, we’ve used four different services to track user presence and securely exchange the messages clients need to start calls.[1]

Each transition has had its own pain points, and I don’t say that lightly: swapping out network stacks in a native app while maintaining protocol compatibility is a real vibe.

Let’s start by taking a quick look at the different solutions we’ve used over the years to get a better idea of the problems we’ve had to solve as we’ve grown, and how we ended up rolling our own blog-worthy service.

From Action Cable to Ably

Before I joined, there were questionable decisions made when it came to scaling, but that’s super fair: the main goal up until that point was to get the product out and validate it.

Tuple launched using Action Cable to keep operational overhead low and run everything as a single service. Once COVID hit, though, it quickly became a dumpster fire.

The service was running on Heroku with all of its dynos maxed out. Even then, it had to be monitored around the clock and restarted every few hours. Sprinkle a little pandemic in the mix, and you had the perfect recipe for a very bad time.

There were really only two choices: keep maintaining it and figure out a way to scale, or find a provider that would do the heavy lifting.

It was ultimately decided to migrate clients over to Ably, which is a fully managed service where you pay based on usage. Ably provides several SDKs, including one in Objective-C which could be used natively.

Ably’s client had some stability issues, but after I joined we were able to contribute fixes and get them merged upstream. I’m kind of a dinosaur on the Mac, so I’m pretty comfortable with Objective-C.

Regardless, migrating to Ably was a huge win: we certainly never lost any sleep over service outages and could focus on building extremely important user-facing features, like sending confetti celebrations to your pair.

Getting ready for Linux

Fast forward a year or two, and we found ourselves wanting to target platforms other than the Mac. Linux, by popular demand, was at the top of the list.

Tuple’s engine relies on WebRTC, which we use directly rather than through a browser (or Electron). This lets us reduce overhead significantly and tweak codecs to deliver high-resolution screen shares with crisp text. WebRTC is written in C++, and up until this point we had chosen to tread very lightly around its codebase to avoid complexity.

On the Mac side of things, we could interface with WebRTC through its Objective-C adapters which play nicely with Swift. The adapters let us maintain our protocol-specific code in Swift, iterate quickly with less boilerplate code, and keep the C++ demon in its cage. This wouldn’t really suit us moving forward, though: Swift isn’t a language we’d like to bet on using across different platforms.

The writing was on the wall: we were going to have to double down on C++ development. Time to enter the thunderdome!

Firebase to the rescue

If we were going to invest more in our C++ codebase, we’d need to figure out how to bring Ably along. The only problem was that Ably doesn’t provide a C/C++ client, probably because they aren’t masochists.

As you can imagine, most service providers don’t offer bindings for lower-level languages, let alone support Linux. We were happy to find one exception: Google’s Firebase.

Firebase is a collection of services that can be used as building blocks to deliver real-time features like user presence.[2] It’s popular with game developers, so it has an actively maintained C++ SDK. Score one for Tuple. Our only apprehension was Google’s history of killing off its products, but we felt fairly confident that Firebase would stand the test of time.

Firebase was going to be our knight in shining armor. Or so we thought…

Side-loading Firebase

After a few weeks, we had a drop-in replacement for our Ably integration ready. The founders still had recurring nightmares about the migration from Action Cable, so we decided to try something clever. We weren’t jumping from a burning ship, so we had time on our side to experiment.

Our plan was to ship our Firebase integration alongside our existing Ably one so that users on either service could still reach each other. We built in a remote switch that let us roll specific users or teams over to Firebase while staying compatible with users who were still on Ably. This let us move users over incrementally and keep an eye on our support queue. If the experiment started to fail, we could switch everyone back to Ably without deploying a client update.[3]
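To make the remote switch idea concrete, here’s a minimal Go sketch. It is not Tuple’s implementation. The `RolloutConfig` type, its fields, and the choice to publish to both backends during the transition are all hypothetical. They only illustrate how a server-controlled allowlist plus a kill switch lets you move teams over gradually and roll everyone back without shipping a new client.

```go
package main

import "fmt"

// Backend identifies a signaling provider (illustrative names only).
type Backend string

const (
	Ably     Backend = "ably"
	Firebase Backend = "firebase"
)

// RolloutConfig is a hypothetical, remotely fetched config: a global kill
// switch plus allowlists of teams and users that should use the new backend.
type RolloutConfig struct {
	Enabled bool
	Teams   map[string]bool
	Users   map[string]bool
}

// ListenBackend picks the backend a client subscribes on. Setting Enabled
// to false sends everyone back to Ably without a client update.
func (c RolloutConfig) ListenBackend(userID, teamID string) Backend {
	if c.Enabled && (c.Teams[teamID] || c.Users[userID]) {
		return Firebase
	}
	return Ably
}

// PublishBackends returns where a client sends messages. Publishing to both
// while the rollout is on (an assumption of this sketch) keeps migrated and
// non-migrated clients able to reach each other.
func (c RolloutConfig) PublishBackends() []Backend {
	if c.Enabled {
		return []Backend{Ably, Firebase}
	}
	return []Backend{Ably}
}

func main() {
	cfg := RolloutConfig{Enabled: true, Teams: map[string]bool{"team-a": true}}
	fmt.Println(cfg.ListenBackend("u1", "team-a")) // firebase
	fmt.Println(cfg.ListenBackend("u2", "team-b")) // ably
	cfg.Enabled = false                            // flip the kill switch
	fmt.Println(cfg.ListenBackend("u1", "team-a")) // ably
}
```

Because the config lives on the server, a rollback is a data change rather than a client release.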

Over the course of a few days, it became clear that Firebase did not work as advertised: some people would appear online indefinitely if their clients disconnected unexpectedly. This wouldn’t have been a showstopper if the bug had been in the client, since it’s open source, but the problem appeared to originate on Firebase’s servers.

We spoke with Firebase support, opened issues, and even provided reproducible test cases, but we were told repeatedly that things were working as expected. It wasn’t until a year later that they acknowledged and fixed the bug.[4]

After taking a little time to lick our wounds and accept the fact that no one would save us, we knew we really only had two options: write our own Ably client, or write our own service.

It seemed like it was a good time to invest in ourselves.

Our solution: The Realness

Prior to joining Tuple, I took a few years off from developing platform-specific code and wrote real-time microservices in Go. I had a high degree of confidence that we could create our own service and make it scale. It was our destiny ✨

This time around, we knew we had product-market fit with a very active user base, and we knew exactly which features we’d need in our own service. That made it much simpler to deliver a solution without extra cruft.

I was able to write most of our service[5] over the span of a few weeks: it felt so good to be writing Go again and reasoning about concurrency more naturally. Although I took the lead, I’d Tuple with other members of the team every day so that the service became familiar to everyone.

We wrote loads of tests together to cut down the time it took to validate each requirement we had for the service. Each server is backed by Redis in such a way that data from an unhealthy instance is automatically deleted. Each test case uses actors connected to different servers, backed by one or more Redis servers. This made it relatively straightforward to cover tricky scenarios like scaling up or down, nodes dying unexpectedly, or replaying missed messages with microsecond precision.

Rolling it out

Once our service was ready, we started placing bets on whether or not we could bring it to its knees. Matt, one of the other engineers at Tuple, set up a stress-testing environment and deployed it to multiple regions across the globe. I guess you could say things were getting pretty serious.

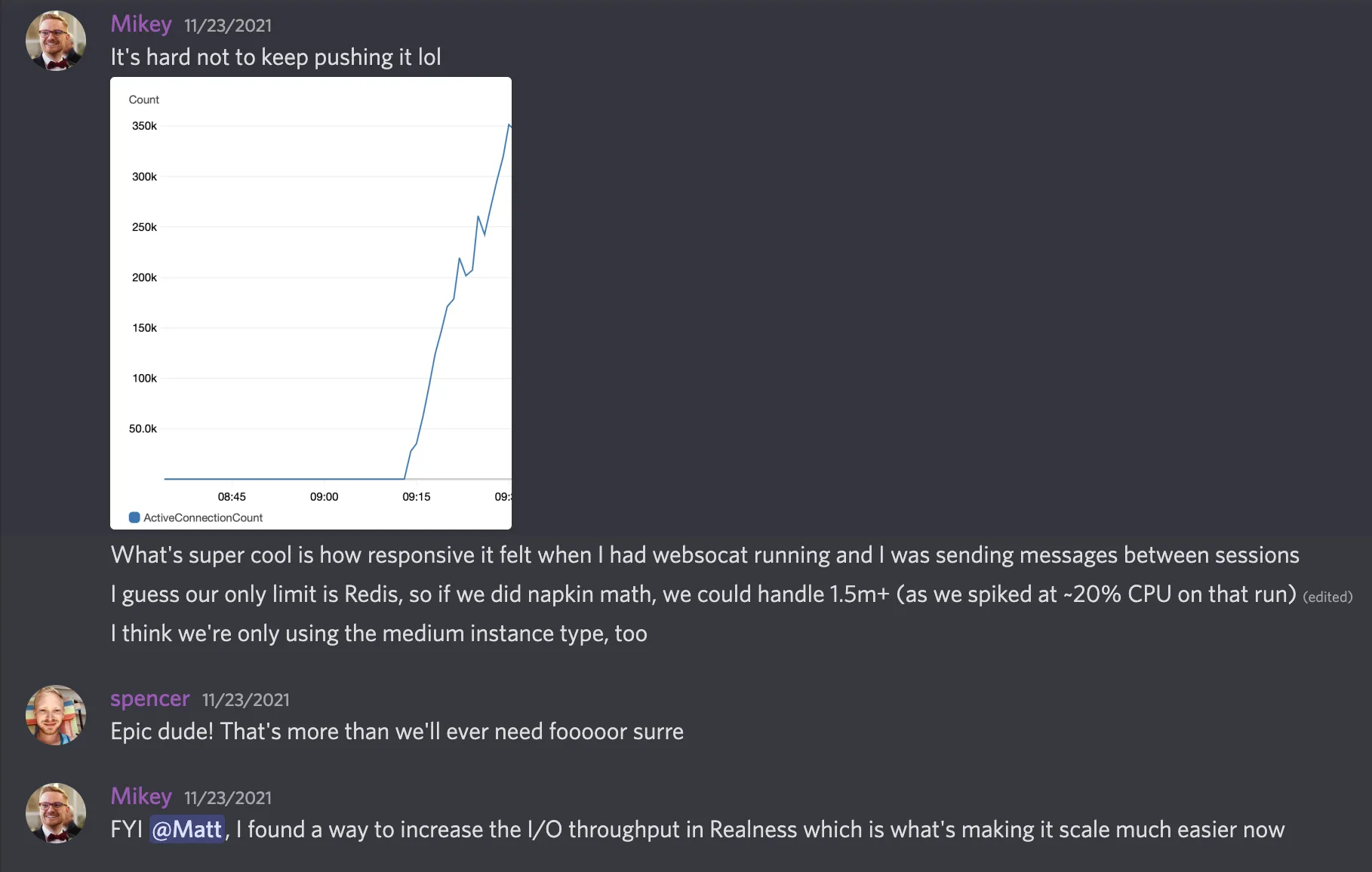

With a fleet of virtual honey badgers ready to attack our service, we felt drunk with nerd power. Over the course of a few runs, we managed to get up to a few million concurrent connections while maintaining round-trip times within milliseconds. Honestly, we never found the service’s limit, but we figured a few million should be our baseline because it sounded cool.

With our stress tests running, we validated that our servers would auto-scale and distribute their workload across multiple zones on demand. At this point, we felt pretty good about trying the side-loading approach again.

We deployed our home-brewed solution alongside Ably using the same approach as before, and this time we didn’t need any rollbacks. None of our users noticed, but we knew we’d hit a major milestone and had a solid foundation for porting Tuple’s engine to other platforms. Our engineering channel on Discord was lit; sometimes the best releases are the ones where you can hear crickets chirp.

Closing thoughts

Today, our real-time service powers both our Linux and Mac apps using a small, portable C++ client. It went live over a year ago and it hasn’t required any code changes in about 10 months. We hardly have to think about its maintenance and it’s added zero stress to our workload.

Although we’ve switched our real-time infrastructure out a few times, we were able to move fast and not break things. We never felt like we were blocked from delivering new features while any of the transitions were happening behind the scenes. It really paid off to keep things simple until we were forced to solve problems which were no longer hypothetical.

I hope you enjoyed reading about part of our journey.

Until next time!

About the author

Hi! I’m Mikey, the first full-time engineer at Tuple. I was hired at the height of COVID and have helped Tuple scale while the world went remote. Since joining the team, my role has grown from Mac expert to software architect, where I help shape our engine to grow alongside new hires, platforms, and features.

You can find me lurking on Twitter @YoungDynastyNet.

Footnotes

[1] See https://webrtc.org/getting-started/peer-connections for more information.

[2] Their documentation uses presence as an example application. They also have a blog post about it.

[3] This is something that’s easy to take for granted when targeting the web, but updating binaries running locally on people’s machines is a different beast that requires a lot more coordination. It can also be disruptive to people who are actively on a Tuple call.

[4] Better late than never! See this issue on their JS SDK.

[5] After binge-watching Drag Race during COVID, I named the service the “Realness”. If you’re a fan of the show, you’re probably gooped and gagged.